Micro-Deconstruction

Michael Wright

Introduction

Throughout the eons, music has evolved alongside humanity’s obsession with technology and the various tools we have developed to further our ability to survive. The rise of new technologies has also given birth to new artforms, one of the newest of which is video games. While music has accompanied other artforms, its forms within those frameworks have generally resembled the forms it inhabits on its own. The interactive nature of video games, however, creates a unique space where a game’s accompanying music often has an undetermined form. This lack of form could be considered a type of improvisation, and even resembles some forms of blues, where there are musical set pieces and standards that each player has learned, but each piece can be employed by a player at their own discretion, rather than according to a pre-determined structure.

Inception

For this project, I wanted to explore and exploit this type of electronic improvisation more deeply as a composer. While the composition is the central part of this project, as well as the final output, most of the work revolved around designing the system itself and the instructions given to the software controlling it; it is effectively a type of software programming, except that it is done with a graphical interface rather than by writing the code myself. The software I used for this was FMOD, a type of software called middleware (because it lives between the music and the game). From the beginning, I had planned to implement the final output in the game that Stefan Johansson has been developing. Unfortunately, due to schedule conflicts on his part, we were unable to do that before the submission date, though we still expect to do so in the coming weeks.

Throughout this paper, I will be defining important terms, but I will first need to lay out some key definitions. Almost from the onset of video games, there have been essentially 2 core compositional techniques used to exploit this interactivity, respectively called vertical re-orchestration and horizontal re-sequencing. Vertical re-orchestration, simply put, is a composition technique where ‘a musical composition is divided into a number of different layers’ (Type, 2023) to reflect a game’s changing environment, such as the melody moving from a flute to a violin. Horizontal re-sequencing uses different sections of music to the same end, where, if ‘an event is triggered while a track is playing the playhead will "jump" from that track to another one’ (ibid), i.e., game event A leads to musical section A, and event B to section B (and subsequent occurrences of each event will lead to their respective musical material, or even to a re-orchestration or new motivic development of the same material). A third approach is algorithmic, or generative, composition, which is non-linear ‘music that generates in response to the player's actions in real-time’ (Pasquier, 2022), and has also been considered a type of aleatoric music (Phillips, p. 32). This is best exemplified in a game like Spore (2008), officially a ‘life simulation, real-time strategy, god simulator’ game, where players essentially control the evolution of species and guide them in a direction of their choosing. It is a game designed around creating many possibilities, many of which are procedurally generated (where, much like generative music, the game is able to create new scenarios and combinations based on its algorithms). The music is designed to reflect this, with a significant number of small musical pieces that the game recombines based on its internal programming.
My overall approach blended all 3 styles, being semi-generative in that the game can recombine my pre-composed modules in a variety of different ways.

While these techniques are well established and well researched, I wanted to explore them in a more extreme form. Since these techniques are inherently modular, and thus built on modules of varying sizes, I wanted to build a system that can react to changes in the game as quickly as possible, and to do that, I needed to break the composition down into its lowest common denominator, or smallest possible pieces. In this case, I found that to be 1 bar; it is possible to break it down further, but I have found in my own work on other projects that it can be difficult to convey any sense of time or rhythm in those cases, so that approach is best saved for compositions that do not rely on these.

The Project

The most difficult part of this process was not the composition itself, but rather how the different pieces of the composition would be pieced together within the game. The game itself is a rather simple game with relatively few possibilities. As such, I felt it would be best to keep the elements of the composition fairly simple, not going far beyond a song-like melody and accompaniment arrangement. With these limitations set, my main tools were transformations of the primary material. The main musical components I focused on were melodic variations, harmony, and rhythm (primarily in the form of ostinatos), all of which were controlled by various game parameters.

While video game music is unique in its processes, the beginning of the process is no different from that of any other type of mixed-artform composition, and much like in scoring a film, I needed to work out the main motivic ideas that would best represent the accompanying project; as I am working with Stefan on his helicopter game, I needed to make sure my ideas fit that context. When writing the initial version of the composition, I selected a theme and accompanying orchestration that I felt would complement the themes and energy of the game itself. My first, misguided, thought was to approach it as a military-inspired theme, using the same types of horn melodies and snare drum patterns, reminiscent of military drum rudiments, that are used in scores from military-focused films like Saving Private Ryan and Captain America (the scores of which were in turn inspired by Aaron Copland’s use of these same elements). This was prior to having a chance to play the game, and upon spending a few minutes with it, I discovered the overall tone of my themes was incompatible.

1. My original theme

2. Excerpt from Captain America Main Titles by Alan Silvestri, from Captain America: The First Avenger (2011)

Before delving further, I will need to define some terms for clarity. The first term is ‘pace’, which when describing the game, means the speed and energy of the gameplay. This includes both the speed of the helicopter the player is piloting, as well as the rate at which events in the game happen. In musical terms, it is perhaps more obvious, being a combination of tempo and rhythmic space and frequency within the music.

The second term is ‘tone’. Not to be confused with the more musical definitions, here I am referring to both the pace and the intended emotional response generated by the combined elements of the game (e.g., the game’s narrative, art style, and genre).

Another primary area where my composition conflicted with the intended purpose of this project was in the rhythms I selected. As my intention with this project was to compose music that is highly flexible and able to pivot at a moment’s notice, I found that the rhythmic structures of my original themes were too inflexible due to their distinct rhythmic signatures, and that altering these figurations in any meaningful way broke any sense of cohesion or fluidity; even relatively small adjustments meant that they would not be perceived as natural variations or evolutions of a singular motif, but would rather seem to be a different one entirely.

Thus, I had to entirely recompose the music to fit the purpose. In my second attempt, I began with a more subtractive approach. I started with a bar of 12/8 containing a steady 12-note rhythm of eighth notes, and then removed notes at different points where I felt it would highlight different, unique rhythmic fixtures, while still sounding like developments of each other. These small changes allowed for the same basic pulse and rhythmic energy to be carried through each sequence, while being distinct enough to be perceived as developments of the same musical ideas.

3. The ‘prime’ rhythmic pattern
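
The subtractive process described above can be sketched in a few lines of Python. This is only an illustration: the bar is modelled as twelve eighth-note slots, and the function names and the particular slots removed are assumptions, not the actual patterns used in the piece.

```python
# Hypothetical model: one bar of 12/8 as twelve eighth-note slots (1 = note, 0 = rest).
FULL_BAR = [1] * 12  # the steady twelve-note starting point


def remove_notes(pattern, positions):
    """Return a copy of the pattern with the given slots (0-11) silenced."""
    return [0 if i in positions else hit for i, hit in enumerate(pattern)]


def render(pattern):
    """Draw the bar as four dotted-quarter beats of three eighth notes each."""
    beats = [pattern[i:i + 3] for i in range(0, 12, 3)]
    return " | ".join("".join("x" if hit else "." for hit in beat) for beat in beats)


# Illustrative removals only; the slots chosen in the real ostinatos are not stated.
variant_a = remove_notes(FULL_BAR, {6})     # silence the first eighth of beat 3
variant_b = remove_notes(FULL_BAR, {2, 8})  # silence the last eighth of beats 1 and 3

for pattern in (FULL_BAR, variant_a, variant_b):
    print(render(pattern))
```

Because every variant is derived from the same twelve-slot grid, the underlying pulse stays constant while the gaps give each variant its own profile.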

However, difficulties quickly arose with this rhythmic pattern. I began with a simple, driving ostinato, which I had formed as the basis for the piece. Coming from a background of writing rock and metal songs, which tend to prominently feature repeated guitar riffs and drum beats, it is my tendency to work with and around these types of fixtures, adapting the music around them and developing them in such a way as to forestall the onset of ear fatigue. The danger with material that loops endlessly, however, is that the mental-burrowing power of short patterns tends to be strong, and this particular one was no exception. My main solution to this was to create additional ostinatos that would be used for various purposes within the game, though I had not yet determined how they would be used (nor how many I would need).

In terms of the world and narrative design of the game, it primarily involves flying over an open, glacier-filled ocean. I wanted to take some cues from that oceanic environment, and so looked to a game where the ocean was a main component: The Legend of Zelda: The Wind Waker (2003), the score composed by Koji Kondo. Admittedly, the ocean is about the only thing the two games have in common, but as a central visual concept, it seemed like a good starting point.

4. Excerpt from sailing theme from The Legend of Zelda: The Wind Waker (2003)

Wind Waker is a light-hearted adventure game with a cartoon-like art style, and thus is generally much brighter in tone and mood. I knew from the start I would have to find a way to darken those ideas, as Stefan’s game is more action-oriented, and primarily involves shooting targets (mostly icebergs and unmanned drones) with missiles and a machine gun. Nonetheless, my second attempt still ended up more fitting for a cartoon world, and thus was not fit for purpose. There was also a cheeriness, as well as a ‘bounciness’ brought on by the alternating long-short note lengths in this version, that would have caused a disconnect between the music and what the player would expect to hear in the game’s environment (what Michel Chion calls ‘Audiovisual Dissonance’ (1994, p. 38)), breaking any sort of player immersion.

5. My second attempt

The third time is the charm, as they say, which proved to be the case here. My third iteration yielded what would become my main melody, and the main component of the music. I settled on a more ‘heroic’ melody, invoking adventurous themes from both Wind Waker and John Williams’ film scores; Stefan’s game is largely built around defending a large ship carrying supplies to various places, which is attacked by various nefarious enemies (including icebergs). I also felt that the melody’s rhythmic diversity, coupled with its being built around scale runs and arpeggios, would allow for greater developmental potential.

6. The final ‘prime’ melody

Once I had my main ideas established, I utilised a ‘shotgun’ approach, creating a large quantity of variations and permutations of these ideas. By the end of that process, I had nearly 300 bars of material to sift through, all variations of the same ideas (ostinatos, in particular). On one hand, I would say this is not the most efficient approach to this, but on the other hand, I still needed many variations in order to find the ones that best fit together. It is perhaps worth noting that when it comes to AI-generated music, the process more or less works the same, only in that case, it’s the AI generating the vast quantities of material to sift through, rather than me (but it still must be sifted through by a human, at least at this point in time).

The obvious inefficiencies of this stem from the fact that much of what I composed was not used and therefore was discarded. The other issue that arose from this is that the more material there is to work with, the more possible combinations there are, and thus the more time and effort is required to find the thread that connects them all together (or put another way, finding which collective ideas will form the threads best fit for my purposes).

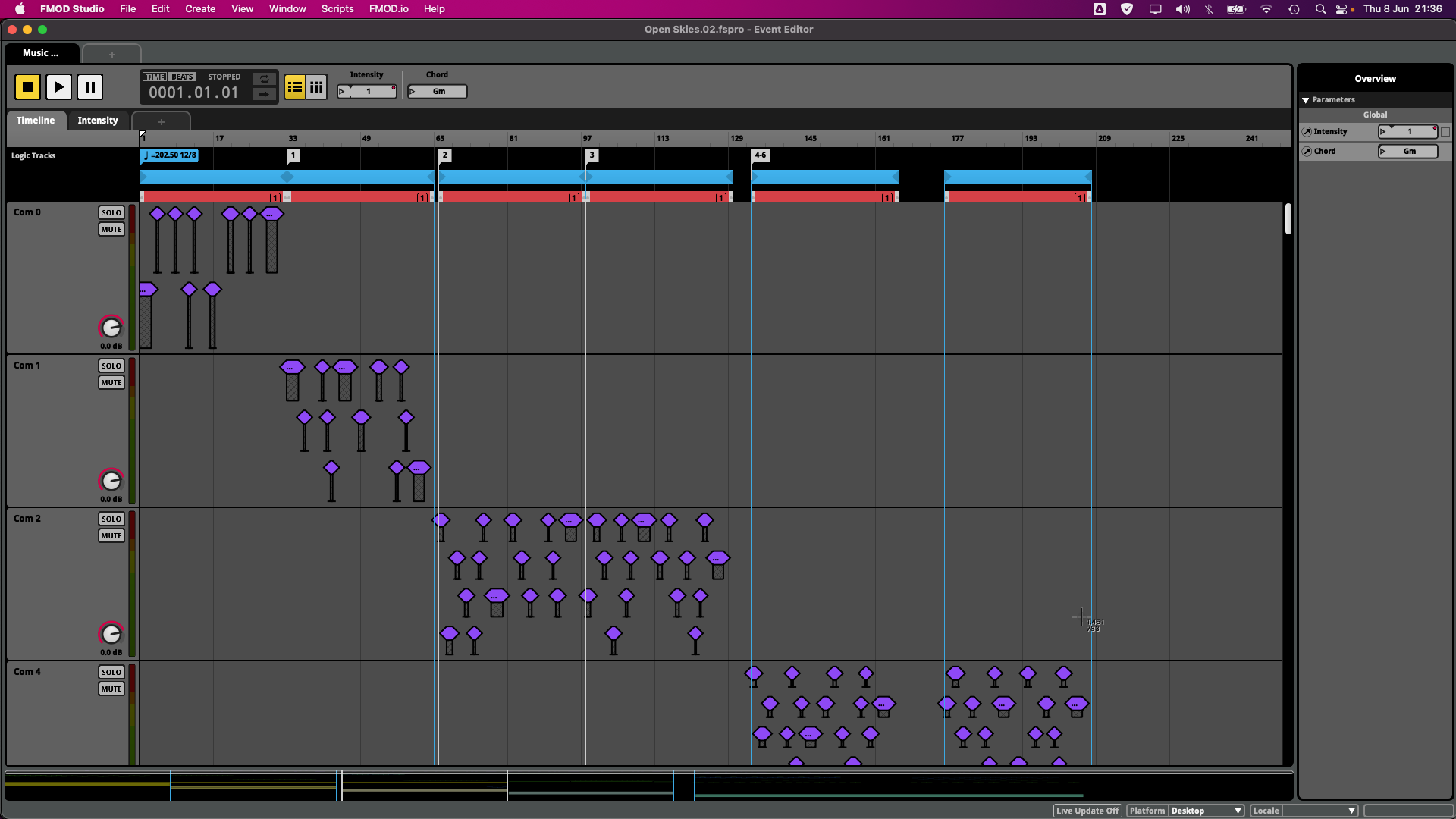

From there, it was a process of extracting the various ideas, creating audio from them, and then placing them into FMOD and isolating the ideas that interact best with each other. This was a time-consuming process that required significant amounts of trial and error, both with mixing the various permutations together, as well as experimenting with different modes of control, or parameters, within FMOD.

After choosing a broader preliminary set of variations, I then designed 3 parameters to control their various expressive functions: ‘dynamics’, ‘melody’, and ‘pattern’. ‘Dynamics’ is self-explanatory, simply being the musical dynamic used (initially ranging from mezzo piano to fortissimo); the ‘melody’ parameter selected from 10 different melodic variations (I chose 10 as it was a manageable amount, but swapped others in and out as I tried them against the main melody); and ‘pattern’ selected from a variety of ostinatos in the accompaniment (I chose 4 in this case, though at the time, I had anticipated as many as 10 being used). All this together amounted to what was in essence a primitive working prototype in FMOD, where I was able to manually change the parameters at will to see how the various parts interacted with each other; it was a crude prototype, however, working monophonically, where one audio file would play, and then immediately get cut off when switching to a new one, losing its natural decay in the process.

The final version functions similarly, but rather than crudely jumping monophonically between samples, each sample is placed within a ‘scatterer instrument’ along the timeline in FMOD. These scatterers solve the monophonic problem by triggering their given samples at set intervals (in this case, 1 bar); once an audio file is triggered, it plays in full until the end, thus retaining its natural decay and making the disparate sections sound as if they are a single melodic line. For melodic sections, scatterers were placed along the timeline at the beginning of each bar; for the accompaniment, i.e. the strings, as they are largely repeating patterns, I created round robins, or multiple versions of the same sample, so as to avoid sounding overly digital and artificial (much like the ‘machine gun’ effect caused by playing the same drum sample over and over).

7. Scatterers placed along the timeline in FMOD
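
The difference between the monophonic prototype and the bar-triggered final version can be modelled with a small scheduling sketch. This is not FMOD code; the `Voice` class, the 2-second bar, and the 2.8-second sample length are all assumed values chosen only to show a decay tail overlapping the next bar.

```python
from dataclasses import dataclass


@dataclass
class Voice:
    name: str
    start: float   # trigger time in seconds
    length: float  # how long the sample sounds, including its decay tail


def schedule(samples, bar_seconds, cut_previous):
    """Trigger one sample per bar; optionally cut the previous one off (monophonic)."""
    voices = []
    for bar, (name, length) in enumerate(samples):
        start = bar * bar_seconds
        if cut_previous and voices:
            previous = voices[-1]
            # The prototype's behaviour: the old file stops when the new one starts.
            previous.length = min(previous.length, start - previous.start)
        voices.append(Voice(name, start, length))
    return voices


def sounding_at(voices, t):
    """Names of all samples still audible at time t."""
    return [v.name for v in voices if v.start <= t < v.start + v.length]


SAMPLES = [("bar1", 2.8), ("bar2", 2.8), ("bar3", 2.8)]  # assumed lengths
prototype = schedule(SAMPLES, bar_seconds=2.0, cut_previous=True)
final = schedule(SAMPLES, bar_seconds=2.0, cut_previous=False)

print(sounding_at(prototype, 2.5))  # only the new sample is heard
print(sounding_at(final, 2.5))      # the old tail overlaps the new sample
```

In the second case the tail of one bar rings over the start of the next, which is what lets separately exported bars read as a single line.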

The main challenge for the majority of this project was tying it all together. I had 3 parameters (dynamics, melody, and pattern) and a plethora of material that did not yet seem cohesive as a system. I gradually started whittling down the material and removing the ill-fitting pieces, and as I did, things slowly became clearer, and I was able to consolidate parameters.

The first revelation I had then was that rather than have a separate parameter for dynamics, it would make more sense to choose melodies that would better fit each dynamic needed, so I removed that parameter and consolidated it into the ‘melody’ parameter. Once I had established that, I needed to revisit my melodies again, this time more intentionally and with less of a ‘shotgun’ approach.

I had discussed with Stefan having a neutral, or minimum, game state, which would eventually be triggered by a lack of activity in the game, such as the player setting down the controls for a specific length of time. To reflect this state of inactivity, my first thought was to partially transpose the melody to the parallel major, as well as move it from the horns to the woodwinds.

8. Neutral melody, first iteration

While I felt that the reduction in energy, especially from the changes in orchestration, accomplished what I had intended, I was again confronted with the issue of a mismatched tone, and once again, it was too tonally bright. As a result, I returned it to its original key of G minor, but felt that, as the energy in the game is reduced, it would be best to similarly reduce the energy in the melody. There were a few ways I accomplished this, the first of which was to thin it out in some places, such as in bar 2, where I omitted a note. I also made some articulation adjustments, as I felt that the prominently featured attack of the staccato notes gave the perception of multiple ‘stabs’, and so I changed those notes to legato, which could instead be perceived as a single, sweeping movement. The final reduction in energy was through the melodic contours, which sometimes was as simple as lowering key notes in the phrase, but also included adjusting arpeggios to chords with less movement.

9. Final neutral melody and orchestration

The next step for the neutral state was the ostinato. This was actually the first variation of the primary ostinato I created, and it is one of the few things that I never felt the need to revise. As I mentioned earlier, I began with a subtractive approach, and for this ostinato, I simply removed a note on beat 3, one of the typically strong beats in 12/8, to again reduce the sense of movement compared to the prime form. It also isolates the notes between beats 2 and 3, giving it a stuttering effect, as if the game itself is uncertain.

10. The neutral ostinato

While I was still working out how to make it all work together, I knew I needed to go in the opposite direction and go for more aggressive components. In this case, I began with the ostinato. Browsing through the many variations I had created, I selected the one that seemed to have the most energy out of all of them. Not only did it emphasise every beat strongly, it was also a longer pattern, with its second bar containing a hemiola, which added additional emphasis on the altered subdivisions, and thus the perception of more movement, or even what some might describe, in non-musical terms, as ‘faster’ (in the same way that some without the vocabulary have described 1/8th notes as faster than ¼ notes). By virtue of being twice as long, this pattern was also more likely to avoid the aforementioned ear fatigue that plagued the others.

11. The ‘exciting’ ostinato
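
The effect of the hemiola on where accents fall can be worked out on the same twelve-slot grid. This is a sketch under an assumption: the hemiola is taken to regroup the bar's twelve eighth notes from four groups of three into six groups of two, which is the usual form in 12/8 but is not spelled out in the text.

```python
def accents(group_size, slots=12):
    """Slot indices (0-11) that begin each group of eighth notes."""
    return list(range(0, slots, group_size))


compound = accents(3)  # normal 12/8 feel: four dotted-quarter beats
hemiola = accents(2)   # assumed hemiola feel: six quarter-note pulses

shared = sorted(set(compound) & set(hemiola))
displaced = sorted(set(hemiola) - set(compound))

print("compound accents:", compound)
print("hemiola accents: ", hemiola)
print("coincide at:     ", shared)
print("new accents at:  ", displaced)
```

Only the start and midpoint of the bar line up in both groupings; the remaining hemiola accents land between the normal beats, which is one way to account for the added sense of movement.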

This pattern also gave opportunity for some unique melodic adjustments. As the subdivisions change in the second bar, the melody needed to conform to the hemiola. I condensed the dotted-quarter tenuto notes to simple ¼ notes, but that also added extra rhythmic space between them and the already long opening note, which diminished the perceived energy and movement, rather than increased it. To counter this, I in part drew again from John Williams’ horn melodies and added some staccatissimo embellishments, which allowed me to retain the basic melodic contours, make the melody more exciting (i.e., increase the energy), and add a distinct signature to this form of the melody.

12. ‘Exciting’ ostinato with melody

When creating material for the even higher intensity levels, I knew I needed to deviate further from the prime melody. I wanted to exploit the distinctive staccatissimo arpeggios of the prime form and base this version around that. The task was simple enough, and largely involved arpeggiating the main notes of the melody. This ensured that it would be compatible with its neighbouring forms, and could move seamlessly to and from them; this specific point required careful attention across all variations and their neighbours, especially as some fragments ‘hang over’ the bar line so they can play their full value, and there were times where I had to make adjustments to compensate for this (either by shortening the note, or moving the first part of a phrase to the end of a previous sample, making them equal lengths). I also wanted to have some developments where, so far, the other forms had not had them, mainly the aforementioned distinctive arpeggios. I made some harmonic alterations to them, though they are mostly subtle (such as changing the Eb chord to a G minor chord by lowering the Eb to a D), and also expanded (or contracted, depending on how you want to look at it) them into triplet forms, adding passing tones to fill them out without changing the starting and end distances.

I did not write this version with any particular pattern in mind, but for simplicity’s sake, and because I felt an additional ostinato pattern might be superfluous at this point (though I would reflect on that again later), I paired it with the previous pattern to see how they interacted. While the subdivisions mostly line up, there are some points where the accents fall in different places. I initially adjusted them to make them fit, but it seemed to make the melody too predictable and less interesting. The offset accents also created what I would consider desirable rhythmic dissonance, fitting for music akin to a cue for an action movie.

13. Arpeggio-heavy transformation with ‘exciting’ ostinato

I further experimented with a variety of different types of motivic transformations, one of these being modulation to various parallel modes. In one case, I wanted to create a more action-focused version of the melody, and so I altered the previous version to utilise more dissonant elements, to even further emphasise the ‘action cue-ness’ of it. To accomplish this, I began by shifting from G minor to G Locrian, as the diminished 5th of the tonic chord would add a healthy amount of dissonance. What surprised me in this case was both that the melody seemed to take Locrian in stride (that is, the main difficulty with Locrian is tonicizing a diminished chord, but G still remained the tonal centre even with the diminished 5th), and that a simple modulation did not render the melody as dark as I had expected.
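
As a worked illustration of what the move to the parallel Locrian mode changes, the two scales can be generated from their interval patterns. The sketch assumes ‘G minor’ means the natural minor scale and spells every pitch with flats; the function names are hypothetical.

```python
NOTE_NAMES = ["C", "Db", "D", "Eb", "E", "F", "Gb", "G", "Ab", "A", "Bb", "B"]

# Semitone offsets from the tonic for each mode.
MODES = {
    "natural minor": [0, 2, 3, 5, 7, 8, 10],
    "locrian": [0, 1, 3, 5, 6, 8, 10],
}


def scale(tonic, mode):
    """Spell the seven notes of the given mode starting on the tonic."""
    root = NOTE_NAMES.index(tonic)
    return [NOTE_NAMES[(root + step) % 12] for step in MODES[mode]]


def tonic_triad(tonic, mode):
    """Stack the 1st, 3rd and 5th scale degrees."""
    notes = scale(tonic, mode)
    return [notes[0], notes[2], notes[4]]


minor = scale("G", "natural minor")
locrian = scale("G", "locrian")
changed = [(a, b) for a, b in zip(minor, locrian) if a != b]

print(minor)                        # G A Bb C D Eb F
print(locrian)                      # G Ab Bb C Db Eb F
print(changed)                      # the 2nd and 5th degrees are lowered
print(tonic_triad("G", "locrian"))  # G Bb Db, a diminished triad
```

Only two degrees differ between the modes, which may help explain why the melody absorbed the change without losing G as its centre.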

While I continued with some of those alterations, I tempered some and exaggerated others, making some more diatonic and some more chromatic. There was another tool that I have not discussed yet, which was instrumental in capturing the tone I was going for, and that is the harmony.

14. Maximum intensity level, with harmony (simplified voicings)

The harmony I constructed for the overall piece was not overly complex. However, my process for determining it was somewhat non-traditional (in this style, at least). When composing my initial ideas, I included very basic harmony to accompany them, planning to flesh it out more when the other ideas were more complete. One of my intentions with this project, which builds off a similar, but much simpler, project I have done through my studies at the University of West London with Professor Bartosz Szafranski, was to have an element of improvisation built into it. As the majority of the harmony in this piece comes from the strings, in order to accomplish this, I needed to isolate each specific note played by each instrument section (violins, cellos, etc.) and create separate audio files for them. In my previous work, I had done this with ambient music using synthesizers and digitally processed piano in a more free-form context, where there was no sense of time or rhythm, so that the whole piece was essentially a moving pad (it could be considered a type of electronically-generated micropolyphony). There, as here, I isolated each pitch class and linked them to a parameter in FMOD which would build chords from the collected pitches. Carrying this technique over to an orchestral setting, and especially in a context where rhythm is a central component, greatly increased the complexity, and thus the number of problems needing to be solved and edges needing to be smoothed.

I crafted the harmony through 3 different pieces of software, beginning with my preferred notation software, Dorico. The bulk of the composing was done there, and as already established, the end product would be placed into FMOD, but to get there, I needed to create the audio samples. Doing that required exporting the MIDI data from Dorico and importing it into my digital audio workstation (DAW) of choice, which is Avid’s Pro Tools.

Even with all of the time I have spent editing audio in recording studios, the amount required for what I wanted to do still turned out to be a challenge (especially on a laptop keyboard, which has since been usurped). There is some kind of learning curve for any project of this type, even if done a thousand times before, and I did eventually simplify my processes and workflow, greatly increasing my efficiency, but it took some time to get there. The first simplification was an adjustment to the 1st violins, which were playing in divisi. As you can see in the score, I have instead split off the divisi into its own violin part, so that there are 3 violin sections instead of 2. Otherwise, I would have had to calculate which 2 divisi parts go with which chord, whereas now they are isolated and can go where I please.

Regardless, the editing required sifting through each chord, and potential chord, I wanted to include, figuring out how I wanted to voice them, and exporting each individual piece as its own audio file. I also needed to space them out appropriately, at 1-bar lengths, so that they would retain their natural decay, without which the transition to another chord or audio file would be jarring, feeling unnatural and forced (the need is similar to that of dovetailing parts when a line moves from one instrument to another). Once all of that was done and edited, I would export the individual files, one at a time (towards the end, however, I did find a slightly cruder process that was much faster). This process also applied to the melodic parts, but there were fewer of those needed (all in all, there are over 400 audio files).

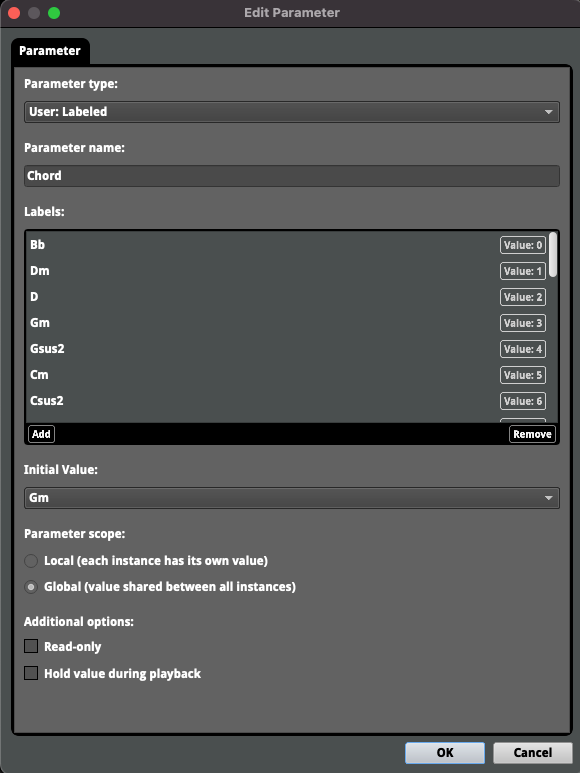

It was not an entirely linear process, as there was a fair amount of juggling back and forth between the two, either because things did not work compositionally or technically, or because I felt the harmony would benefit from some adjustments, e.g., chord extensions or quality changes. Going back to where we left off with our most dissonant variant, once I had all of the audio I needed, I set up another FMOD parameter simply called ‘chord.’ FMOD allows for different types of parameters: discrete, which is sequential whole numbers in a range; continuous, which allows for any decimal value in a range; and labelled, where each value is given a name. For obvious reasons, I chose ‘labelled’ for the chord parameter, and simply assigned chords to each parameter value. When I set up the strings in FMOD, I then assigned each pitch to its corresponding chord and voicing (including some I suspected I would not use, but I felt could at least be entertaining possibilities).

15. The chord parameter and a partial list of its values
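
The relationship between the labelled ‘chord’ parameter and the individual pitch samples can be pictured as a lookup table. The sketch below is plain Python rather than FMOD, and the three chord labels and their pitches are illustrative assumptions, not the project's actual list of values.

```python
# Hypothetical labelled parameter: each label names the pitches that should sound.
CHORDS = {
    "Gm": {"G", "Bb", "D"},
    "Eb": {"Eb", "G", "Bb"},
    "Dm": {"D", "F", "A"},
}


def pitches_for(label):
    """Pitch samples that play when the parameter is set to this label."""
    return sorted(CHORDS[label])


def chords_triggering(pitch):
    """Every label that a single pitch sample has to respond to."""
    return sorted(label for label, pitches in CHORDS.items() if pitch in pitches)


print(pitches_for("Gm"))       # ['Bb', 'D', 'G']
print(chords_triggering("G"))  # ['Eb', 'Gm']
print(chords_triggering("F"))  # ['Dm']
```

The second function shows why most samples end up attached to several parameter values at once: a single pitch usually belongs to more than one chord.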

Compositionally, this allowed me to approach the harmony in a more improvisational way, and as someone who has relied more on my ears than on what’s notated, I found this a comfortable process. It allowed me to run through a variety of chords much faster than through the notation software; either way, there is some trial and error, but adjusting things in the notation can take considerably more time than scrolling parameters with a mouse. I have also been called a ‘stream of consciousness’ composer, in that I have tailored my creative process so that it allows a large measure of chance, to open up possibilities and what TV painter Bob Ross called ‘happy accidents’ that would create new musical ideas that I would not have otherwise come up with on my own (this indeterminacy is also a core part of video games and their music). I used this same approach to carve out more compelling harmony, though it is worth noting that this improvised approach is not ideal for voice leading.

In addition to the harmony itself, I also wanted a changing harmonic rhythm throughout. Fortunately, I also needed a solution for triggering chords in FMOD, and the two problems could share the same solution. After some simple research, I discovered FMOD’s ‘command instrument’, which essentially uses user-created markers to set a variety of functions within FMOD to specific settings, and in this case, I was able to use it to set parameters to specific values. Through this, I set markers at different points on the timeline and assigned them to different chords, which determined my harmony and its rhythm.

16. Command instrument markers (purple) in FMOD
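
The way timeline markers determine both the harmony and its rhythm can be sketched as a sorted list of positions, each of which sets the chord parameter until the next marker is reached. This is a model of the idea only, not FMOD's API; the marker positions (in bars) and chord labels are invented for illustration.

```python
from bisect import bisect_right

# Hypothetical markers: (position in bars, chord label set at that point).
MARKERS = [(0.0, "Gm"), (2.0, "Eb"), (3.0, "Dm"), (3.5, "Gm")]


def chord_at(position):
    """Chord in force at a timeline position: the most recent marker wins."""
    positions = [marker_position for marker_position, _ in MARKERS]
    index = bisect_right(positions, position) - 1
    return MARKERS[max(index, 0)][1]


def harmonic_rhythm():
    """Gaps in bars between successive markers, i.e. how long each chord lasts."""
    return [later[0] - earlier[0] for earlier, later in zip(MARKERS, MARKERS[1:])]


print(chord_at(2.5))      # Eb
print(harmonic_rhythm())  # [2.0, 1.0, 0.5]
```

Moving a marker changes the harmonic rhythm without touching any audio, which is the flexibility this approach offers over pre-rendered chord progressions.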

The other advantage to this approach is its potential for randomisation. Had the audio consisted of pre-recorded block chords, there would be no flexibility in terms of playback; it could only play from specific, pre-arranged recordings. In this case, I was able to include additional embellishments within each part of each ostinato that have a random chance to appear any time their assigned chords are triggered. One tricky part of this was that, due to most pitches being assigned to multiple chords, it created an extra layer of indeterminism, and while indeterminism is the goal here, I had to essentially employ my own probabilistic thinking with these, avoiding pitches that were likely to clash with others (especially when it came to chords using non-diatonic pitches). See link at no. 18 for example.

17. D-minor chord with embellishments in bar 5

18. Demonstration of strings with embellishments
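
The random chance attached to each embellishment can be modelled as follows. The embellishment names, the chords they are assigned to, and the 30% probability are all assumptions made for the sake of the example; the real values live inside the FMOD project.

```python
import random

# Hypothetical embellishments and the chords each one is allowed to decorate.
EMBELLISHMENTS = {
    "violin_turn": {"Dm", "Gm"},
    "cello_passing_tone": {"Dm"},
    "viola_neighbour": {"Eb"},
}


def choose_layers(chord, chance, rng):
    """Always play the base chord; add each eligible embellishment with the given chance."""
    layers = ["base:" + chord]
    for name in sorted(EMBELLISHMENTS):
        if chord in EMBELLISHMENTS[name] and rng.random() < chance:
            layers.append(name)
    return layers


rng = random.Random(2024)  # seeded so that repeated runs give the same sequence
for _ in range(4):
    print(choose_layers("Dm", chance=0.3, rng=rng))
```

Restricting each embellishment to a set of chords mirrors the manual step of excluding pitches that were likely to clash, so the randomness operates only within combinations already judged safe.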

Stefan and I then had a deeper conversation about how things would function; we discussed how the game’s possibilities will relate to the music, and in the end, we concluded that we needed a single parameter built into the game to control which pieces are used and when. It took quite a bit of time for me to discover how to integrate this idea, but I eventually put it together. I had already consolidated the dynamic parameter into the melody parameter, and I found that I could also merge the ‘melody’ and ‘pattern’ parameters to create a simple ‘Intensity’ parameter. Essentially, I expanded on the idea behind having a neutral ostinato, and settled on having different intensity levels, each with a corresponding ostinato and melody variant. Once this was set up, I also connected it to the command instrument in order to control the harmonic aspects of the music, which was a simple matter of assigning an intensity value to a given command marker (though this later became redundant, as I will discuss further on).

Stefan also felt that each intensity level should have a ‘cool down’ timer, so that the intensity levels can move quickly from one to another. The intensity would naturally reduce over a period of time without any other input, i.e., an increase, or at least sustaining, of the action; we decided this period should be around 10 seconds. Because this would allow the intensity level to change quickly and frequently, it also helps with the problem of ear fatigue, as the ostinato will also change in kind (though I still feel more needs to be done to address this problem adequately).

We established that the parameter governed by the game’s intensity would adjust itself based on 4 in-game variables: the distance of the player from enemies, the quantity of enemies within range, player health, i.e., how close the player is to being destroyed, and the player’s speed. While this aspect of the project lies entirely in the realm of programming, and thus outside of my portion of it, it was still helpful for me to understand, in that I could better see the connective tissue between the music and the game.
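
One way the four in-game variables and the cool-down could combine into a single intensity value is sketched below. This is purely illustrative, since the actual game logic is not described here: the equal weighting, the normalisation limits, the number of levels, and all function names are assumptions; only the four inputs and the roughly 10-second cool-down come from the text.

```python
def clamp(value, low=0.0, high=1.0):
    return max(low, min(high, value))


def game_intensity(distance, enemies, health, speed,
                   max_distance=1000.0, max_enemies=10, max_speed=100.0):
    """Average four normalised factors into a 0-1 intensity (equal weights assumed)."""
    closeness = 1.0 - clamp(distance / max_distance)  # nearer enemies raise intensity
    crowd = clamp(enemies / max_enemies)              # more enemies raise intensity
    danger = 1.0 - clamp(health)                      # health is given as 0-1
    pace = clamp(speed / max_speed)                   # faster flight raises intensity
    return (closeness + crowd + danger + pace) / 4


def to_level(intensity, levels=4):
    """Map a 0-1 intensity onto discrete levels 0..levels-1."""
    return min(levels - 1, int(intensity * levels))


def level_after_idle(level, seconds_idle, cooldown=10.0):
    """Drop one level for every full cool-down period without new action."""
    return max(0, level - int(seconds_idle // cooldown))


calm = game_intensity(distance=1000, enemies=0, health=1.0, speed=0)
busy = game_intensity(distance=0, enemies=10, health=0.0, speed=100)
print(to_level(calm), to_level(busy))  # 0 3
print(level_after_idle(3, 25))         # 1
```

Whatever the real formula, collapsing several game variables into one number is what allows a single FMOD parameter to select the matching melody, ostinato and harmony together.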

As mentioned earlier, the vertical and horizontal aspects of music are especially important in video game music. I had conceptualised many of these elements as vertically aligned and re-orchestrated, but found there were some limitations with how FMOD was able to trigger events. The primary issue I had was with cycling the different ostinatos in the strings. Because of the way I had configured the strings to work, with different samples of each note linked to a chord parameter, I needed multiple branches of parameter-linked conditions. To be more specific, FMOD allows for 2 different logic paths for conditions: ‘and’ and ‘or’. The strings are divided into 2 sets of influences: chords and ostinato patterns. These concepts are related, but not directly, and so I needed to be able to have one branch that activates the correct ostinato, and another branch that activates the correct pitch. Given that most pitches are shared by multiple chords, I could only use the ‘or’ logic (meaning ‘either this or this’ can trigger it); otherwise, it would trigger only if both the ostinato and the pitch conditions were met. Had I been able to make a separate branch, this problem would have been easily solved.
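
The limitation can be made concrete with a small truth-table sketch. It models one reading of the problem as described above: a sample should play when any one of its chords is active and its ostinato is selected, but the available logic applies a single ‘and’ or a single ‘or’ across all conditions. The sample definition and state values are hypothetical.

```python
def conditions(state, sample):
    """Flatten everything into one list of individual parameter checks."""
    checks = [state["chord"] == chord for chord in sample["chords"]]
    checks.append(state["ostinato"] == sample["ostinato"])
    return checks


def intended(state, sample):
    """The nested logic that was wanted: (any matching chord) AND (matching ostinato)."""
    return state["chord"] in sample["chords"] and state["ostinato"] == sample["ostinato"]


def all_or(state, sample):
    return any(conditions(state, sample))


def all_and(state, sample):
    return all(conditions(state, sample))


# A hypothetical G sample recorded for the neutral ostinato, shared by two chords.
SAMPLE = {"pitch": "G", "chords": ["Gm", "Eb"], "ostinato": "neutral"}

wrong_pattern = {"chord": "Gm", "ostinato": "exciting"}
right_pattern = {"chord": "Gm", "ostinato": "neutral"}

print(intended(wrong_pattern, SAMPLE), all_or(wrong_pattern, SAMPLE))   # False True
print(intended(right_pattern, SAMPLE), all_and(right_pattern, SAMPLE))  # True False
```

With a flat ‘or’ the sample fires in the wrong ostinato, and with a flat ‘and’ it can never fire at all, because the chord parameter cannot equal two labels at once.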

After some assistance from Google and YouTube (metaphorically speaking), I was able to find a solution. As game music is primarily based on loops, one of the first things I did was set up a loop region, so that the music would loop between the same bars until told otherwise. In addition, I found there is another type of region called a ‘magnet region.’ The magnet region allows the playhead to move from one section to another but remain in the same relative place, i.e., if the music moves while 1/3 of the way through bar 15 in one section, it will move exactly to 1/3 of the way through bar 15 (relative to the start of the magnet region) in the next section. What this basically meant was that I had to mostly abandon the vertical approach altogether, creating independent sections and regions on the timeline for each intensity level. It functions the same as the vertical approach, but is instead laid out horizontally. Aside from some bizarre errors that occur within FMOD that aren’t worth mentioning, this was the final technical problem to solve with the project.
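
The relative jump described above reduces to simple arithmetic: keep the offset from the start of the current region and apply it to the start of the destination region. The sketch below assumes each intensity level occupies a 16-bar region laid end to end on the timeline, which is an invented layout; positions are measured in bars and held as fractions to keep the result exact.

```python
from fractions import Fraction


def magnet_jump(position, current_start, target_start):
    """Move to the same relative place in another region."""
    offset = position - current_start
    return target_start + offset


# Assumed layout: region A covers bars 0-16, region B covers bars 16-32.
REGION_A_START = 0
REGION_B_START = 16

# One third of the way through bar 15, i.e. 14 full bars plus a third of the next.
playhead = Fraction(14) + Fraction(1, 3)

new_position = magnet_jump(playhead, REGION_A_START, REGION_B_START)
print(new_position)                   # 91/3, i.e. 30 and 1/3 bars
print(new_position - REGION_B_START)  # 43/3, the same offset as before
```

Because the offset is preserved, bar lines and chord changes stay aligned across sections, which is how the horizontal layout can behave like the vertical approach it replaced.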

Conclusion

In this project, I sought to develop my own system for hyper-reactive music in video games. While it is unfortunately not yet implemented in Stefan’s game, I still feel it works as a proof-of-concept, if nothing else. However, I do feel that there is more to be done to improve this work, particularly in terms of the composition, as I spent most of my time designing the system and its parameters and discovering the most effective way to have it actually work, both technically and in terms of how the composition fits within that technical framework (mainly, I feel it could use an additional section, which would remove the ear fatigue danger, as well as some additional orchestration). While the project functions how I envisioned it, I still find the results more limited than I had hoped (much of that, however, is that generative music simply needs to be used in a game with many more possibilities than this one to be worth the effort). That aside, I have already used a slimmed-down version of the system for a project, and with all the processes and data collated in my own head, it will serve as a good springboard for future works that I hope will refine and improve upon it.

19. Crude project demonstration in FMOD